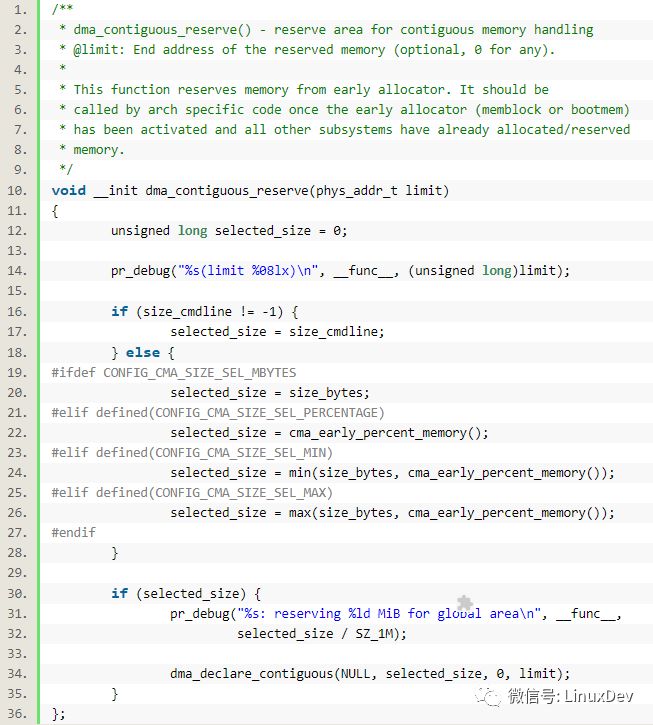

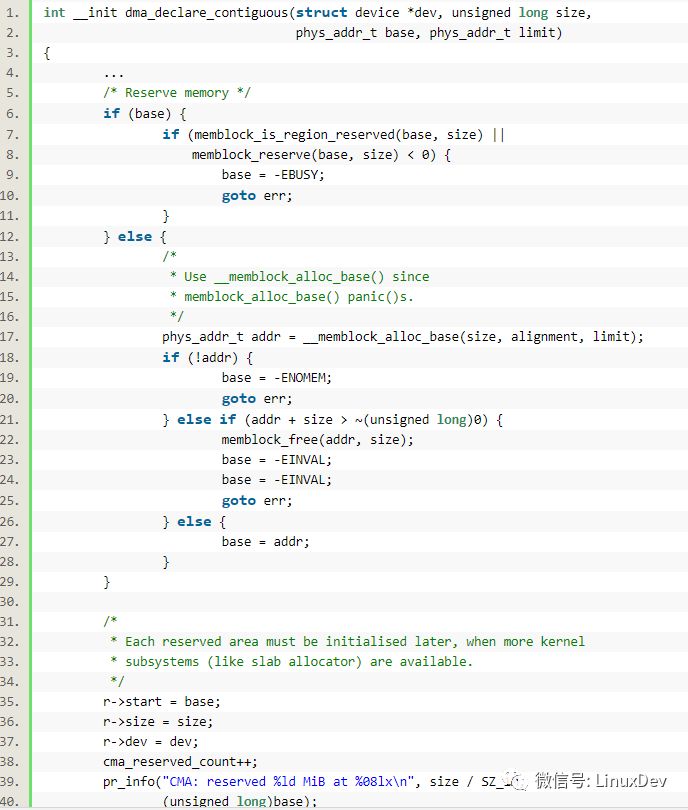

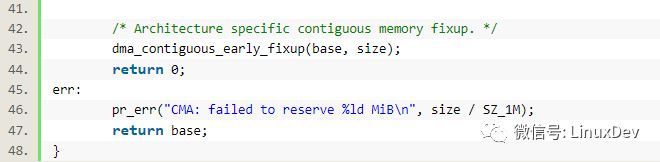

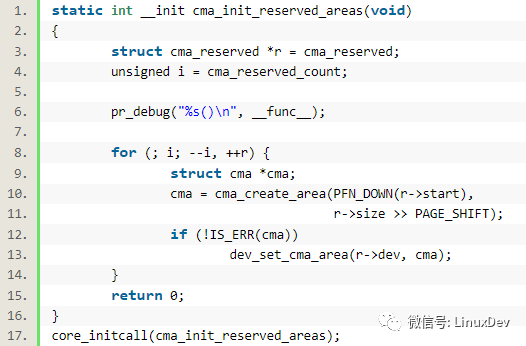

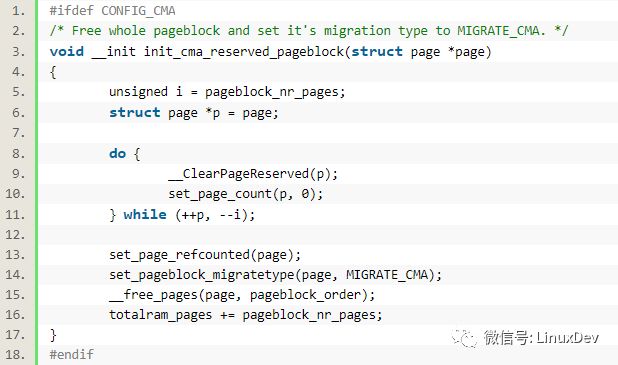

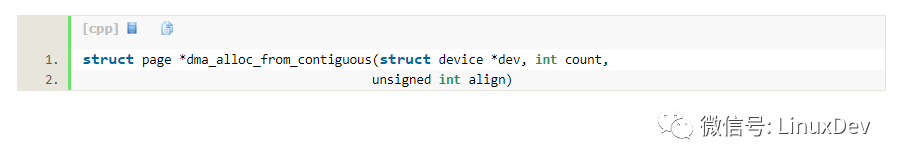

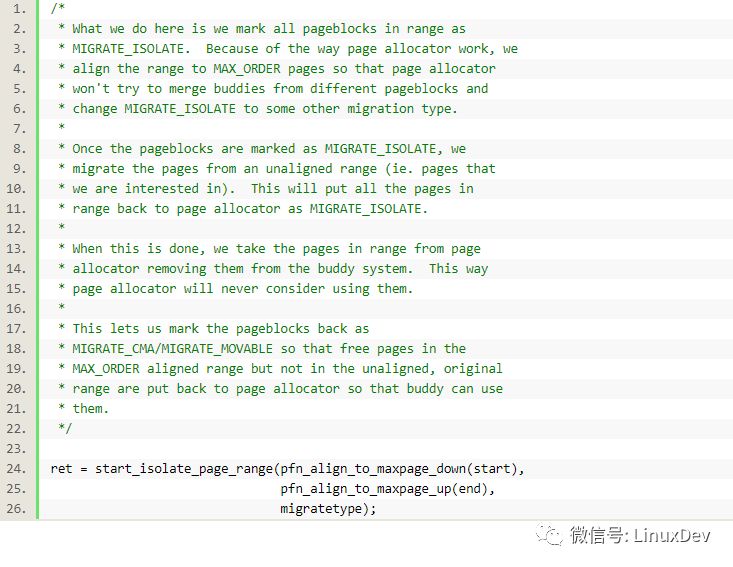

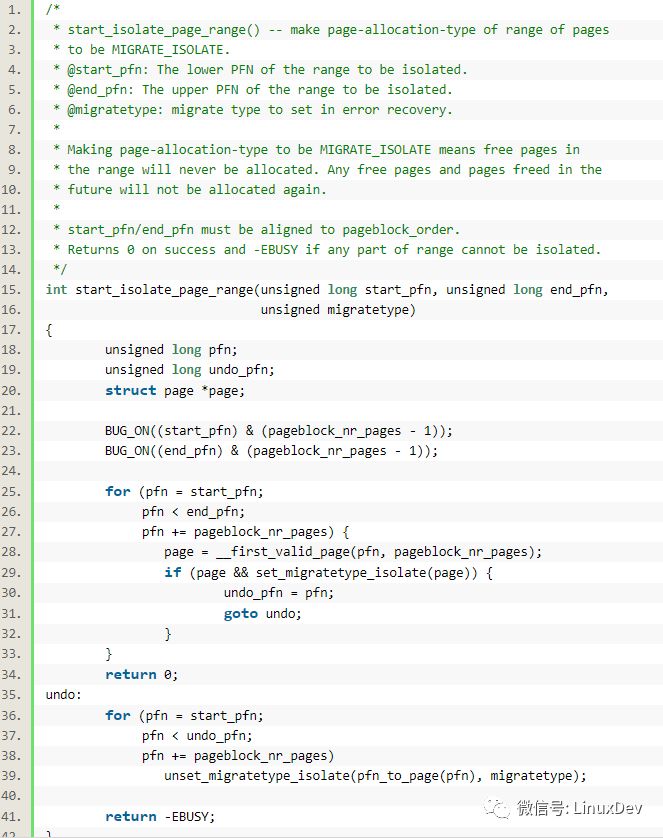

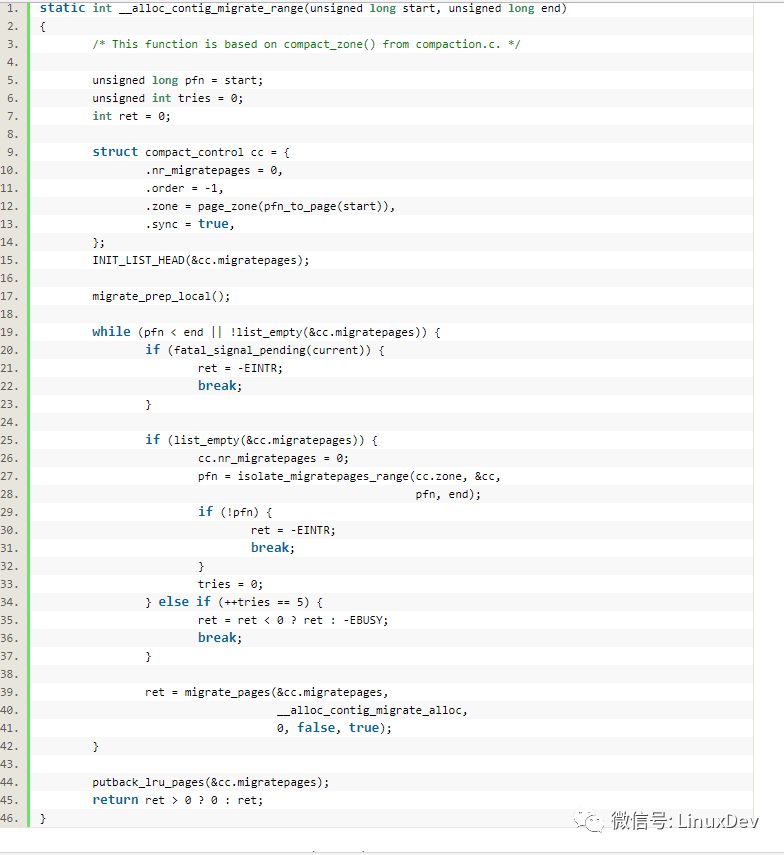

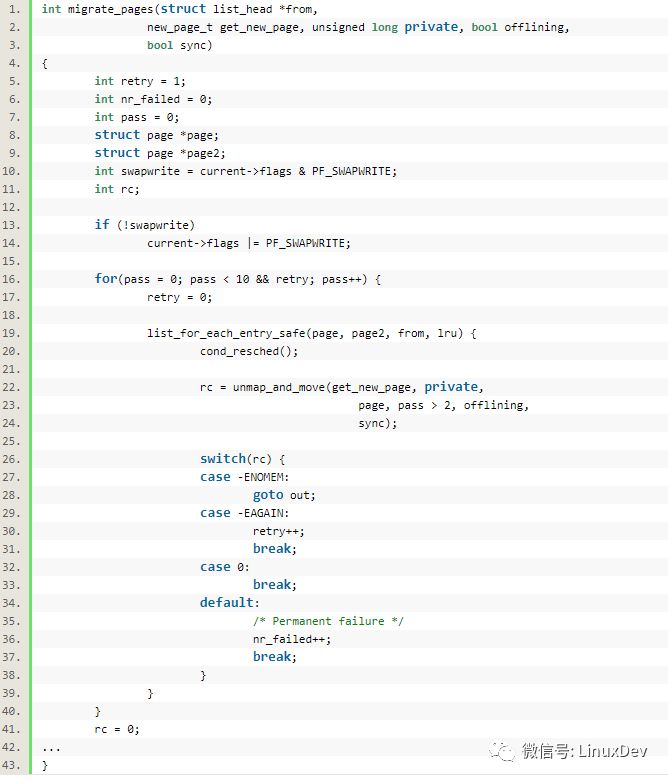

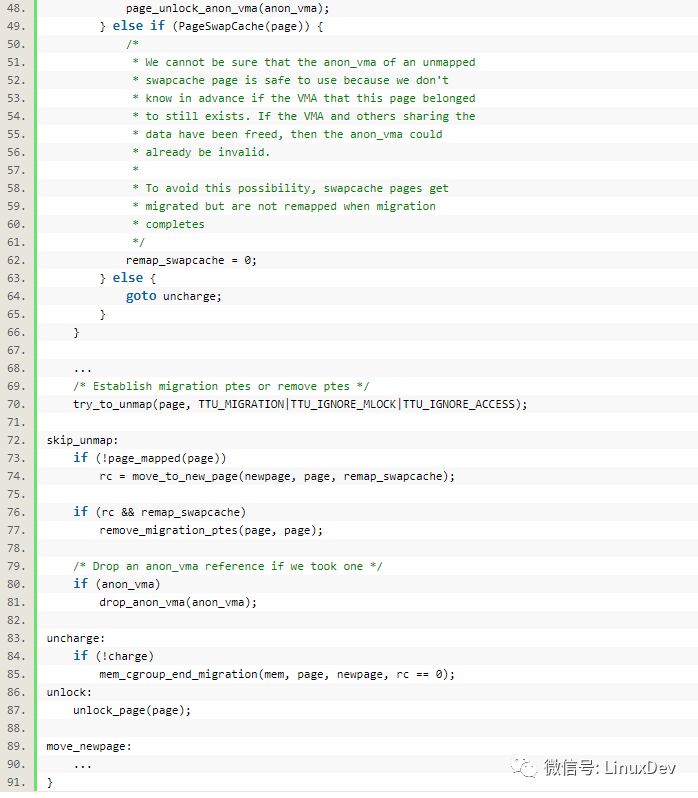

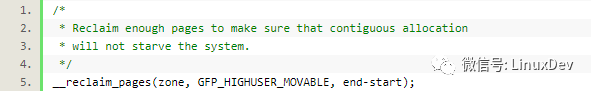

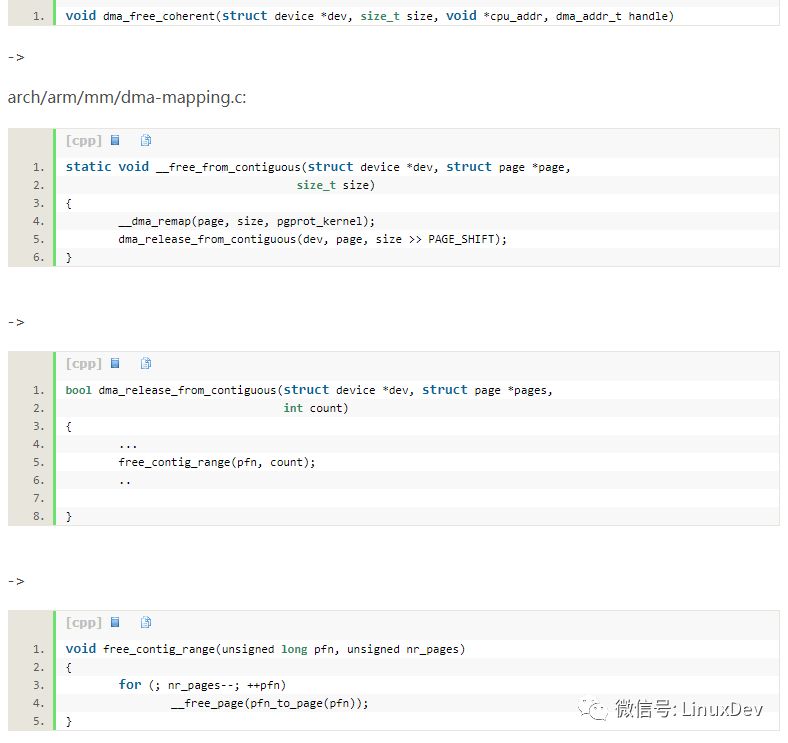

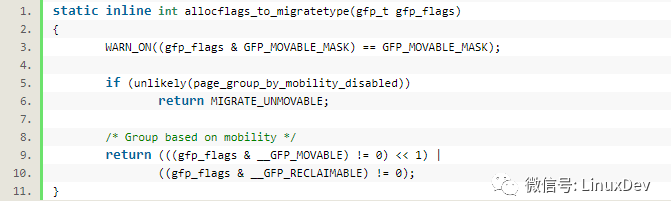

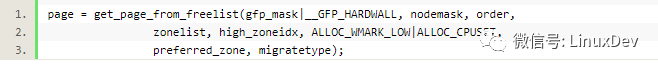

I wrote this article in the first half of 2012; it is now being republished on the WeChat public account.

On embedded Linux systems such as ARM, one headache is that the GPU, camera, HDMI and similar devices need large amounts of contiguous memory. That memory sits unused most of the time, yet the usual practice is to reserve it up front anyway. Marek Szyprowski and Michal Nazarewicz have implemented a new Contiguous Memory Allocator (CMA). With this mechanism the memory no longer has to be set aside permanently: it stays available for normal use and is handed to the camera, HDMI and other devices only when they need it. Let's walk through its basic code flow.

Declare contiguous memory

During kernel startup, arm_memblock_init() in arch/arm/mm/init.c calls dma_contiguous_reserve(min(arm_dma_limit, arm_lowmem_limit));

This function is located in drivers/base/dma-contiguous.c.

There, size_bytes is defined as:

    static const unsigned long size_bytes = CMA_SIZE_MBYTES * SZ_1M;

By default CMA_SIZE_MBYTES is 16 (that is, 16 MB), which comes from CONFIG_CMA_SIZE_MBYTES=16.

Following the call chain from there, it can be seen that the contiguous memory area is also obtained through __memblock_alloc_base() early in kernel startup.
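To make the sizing above concrete, here is a minimal userspace sketch in C (not kernel code). It mirrors the size_bytes computation and the min(arm_dma_limit, arm_lowmem_limit) limit. The helper names (cma_default_size_bytes, min_phys, toy_reserve_base) and the top-down, aligned placement are assumptions made for illustration; in the kernel the actual placement is decided by __memblock_alloc_base().

```c
#include <assert.h>
#include <stdint.h>

#define SZ_1M           0x00100000UL
#define CMA_SIZE_MBYTES 16              /* default taken from the article */

typedef uint64_t phys_addr_t;           /* stand-in for the kernel type */

/* Size of the default CMA area in bytes, as in size_bytes. */
static unsigned long cma_default_size_bytes(void)
{
    return CMA_SIZE_MBYTES * SZ_1M;
}

/* The reservation limit is the lower of the two address limits. */
static phys_addr_t min_phys(phys_addr_t a, phys_addr_t b)
{
    return a < b ? a : b;
}

/*
 * Hypothetical placement rule: put the area as high as possible below
 * 'limit', rounded down to 'align' (which must be a power of two).
 */
static phys_addr_t toy_reserve_base(phys_addr_t limit, unsigned long size,
                                    unsigned long align)
{
    assert(align != 0 && (align & (align - 1)) == 0);
    assert(limit >= size);
    return (limit - size) & ~((phys_addr_t)align - 1);
}
```

For example, with a limit of 0x30000000, the default 16 MB size and an assumed 4 MB alignment, this toy rule places the area at 0x2F000000.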
In addition, the core_initcall() in drivers/base/dma-contiguous.c causes cma_init_reserved_areas() to be called.

cma_create_area() calls cma_activate_area(), and cma_activate_area() calls the following for each pageblock:

    init_cma_reserved_pageblock(pfn_to_page(base_pfn));

This function sets the pageblock to the MIGRATE_CMA type via set_pageblock_migratetype(page, MIGRATE_CMA).

It also calls __free_pages(page, pageblock_order), which eventually calls __free_one_page(page, zone, order, migratetype); the pages are thereby added to the MIGRATE_CMA free_list:

    list_add(&page->lru, &zone->free_area[order].free_list[migratetype]);

Apply for contiguous memory

Contiguous memory is still requested through the standard dma_alloc_coherent() and dma_alloc_writecombine() defined in arch/arm/mm/dma-mapping.c, which indirectly call into drivers/base/dma-contiguous.c and, through a chain of calls, reach:

    int alloc_contig_range(unsigned long start, unsigned long end, unsigned migratetype)

alloc_contig_range() first has to isolate the pages; the purpose of isolation is explained by the comments in the code. Simply put, the relevant pages are marked MIGRATE_ISOLATE so that the buddy system will no longer use them.

Next, __alloc_contig_migrate_range() is called to isolate and migrate the pages. The function migrate_pages() completes the migration. During migration, each new page is allocated through the __alloc_contig_migrate_alloc() callback that is passed in, and the contents of the old page are copied to the new page.

The key function here is unmap_and_move(), defined in mm/migrate.c. Through unmap_and_move(), the old page is migrated to the new page.

The next step is page reclaim. Its purpose is to keep the system from becoming starved of memory after the contiguous memory has been taken away.

Free contiguous memory

Releasing the memory is comparatively simple: the code in arch/arm/mm/dma-mapping.c directly returns the pages to the buddy system.
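The isolate, migrate, claim and free steps described above can be pictured with a small userspace model in C. It is a toy sketch, not the kernel's algorithm: the toy_ names, the 16-page zone and the page states are all invented for illustration, and real migration in unmap_and_move() also has to deal with mappings and locking, which are left out here.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_NPAGES 16

/* Hypothetical page states; the kernel tracks far more than this. */
enum toy_state { TOY_FREE, TOY_MOVABLE, TOY_PINNED, TOY_CONTIG };

struct toy_zone {
    enum toy_state page[TOY_NPAGES];
};

/* Find a free page outside [start, end) to migrate into; -1 if none. */
static int toy_find_target(const struct toy_zone *z, size_t start, size_t end)
{
    for (size_t i = 0; i < TOY_NPAGES; i++)
        if ((i < start || i >= end) && z->page[i] == TOY_FREE)
            return (int)i;
    return -1;
}

/* Claim pages [start, end) as one buffer; 0 on success, -1 on failure. */
static int toy_alloc_contig_range(struct toy_zone *z, size_t start, size_t end)
{
    size_t movable = 0, free_outside = 0;

    assert(start < end && end <= TOY_NPAGES);

    /* Check first so that a failed request leaves the zone untouched. */
    for (size_t i = 0; i < TOY_NPAGES; i++) {
        int inside = (i >= start && i < end);

        if (inside && (z->page[i] == TOY_PINNED || z->page[i] == TOY_CONTIG))
            return -1;
        if (inside && z->page[i] == TOY_MOVABLE)
            movable++;
        if (!inside && z->page[i] == TOY_FREE)
            free_outside++;
    }
    if (movable > free_outside)
        return -1;

    /* "Migrate": move each in-use page to a free page outside the range. */
    for (size_t i = start; i < end; i++) {
        if (z->page[i] == TOY_MOVABLE) {
            int target = toy_find_target(z, start, end);

            z->page[target] = TOY_MOVABLE;
            z->page[i] = TOY_FREE;
        }
    }

    /* The range is now empty; hand it to the caller. */
    for (size_t i = start; i < end; i++)
        z->page[i] = TOY_CONTIG;
    return 0;
}

/* Give the range back so ordinary allocations can use it again. */
static void toy_free_contig_range(struct toy_zone *z, size_t start, size_t end)
{
    for (size_t i = start; i < end; i++) {
        assert(z->page[i] == TOY_CONTIG);
        z->page[i] = TOY_FREE;
    }
}
```

In this model a range containing a pinned (unmovable) page can never be claimed, which is the intuition behind only letting movable data live in the CMA area.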
Migratetype of kernel memory allocation

When the kernel allocates memory, the request carries GFP_ flags, and those GFP_ flags can be converted to a migratetype. When memory is requested, the allocator looks at the free_list whose migration type matches.

In addition, the author has also written a test program so that the CMA functions can be exercised at any time:

    /*
     * kernel module helper for testing CMA
     *
     * Licensed under GPLv2 or later.
     */

    #include <linux/module.h>
    #include <linux/device.h>
    #include <linux/fs.h>
    #include <linux/miscdevice.h>
    #include <linux/dma-mapping.h>

    #define CMA_NUM  10
    static struct device *cma_dev;
    static dma_addr_t dma_phys[CMA_NUM];
    static void *dma_virt[CMA_NUM];

    /* any read request will free coherent memory, eg.
     * cat /dev/cma_test
     */
    static ssize_t
    cma_test_read(struct file *file, char __user *buf, size_t count, loff_t *ppos)
    {
        int i;

        /* free the first slot that is in use; slot i holds (i + 1) MB */
        for (i = 0; i < CMA_NUM; i++) {
            if (dma_virt[i]) {
                dma_free_coherent(cma_dev, (i + 1) * SZ_1M, dma_virt[i], dma_phys[i]);
                dev_info(cma_dev, "free virt: %p phys: %p\n", dma_virt[i], (void *)dma_phys[i]);
                dma_virt[i] = NULL;
                break;
            }
        }
        return 0;
    }

    /*
     * any write request will alloc coherent memory, eg.
     * echo 0 > /dev/cma_test
     */
    static ssize_t
    cma_test_write(struct file *file, const char __user *buf, size_t count, loff_t *ppos)
    {
        int i;
        ssize_t ret = count;

        /* fill the first empty slot; slot i asks for (i + 1) MB */
        for (i = 0; i < CMA_NUM; i++) {
            if (!dma_virt[i]) {
                dma_virt[i] = dma_alloc_coherent(cma_dev, (i + 1) * SZ_1M, &dma_phys[i], GFP_KERNEL);

                if (dma_virt[i]) {
                    void *p;
                    /* touch every page in the allocated memory */
                    for (p = dma_virt[i]; p < dma_virt[i] + (i + 1) * SZ_1M; p += PAGE_SIZE)
                        *(u32 *)p = 0;

                    dev_info(cma_dev, "alloc virt: %p phys: %p\n", dma_virt[i], (void *)dma_phys[i]);
                } else {
                    dev_err(cma_dev, "no mem in CMA area\n");
                    ret = -ENOMEM;
                }
                break;
            }
        }

        /* report the failure to the writer instead of always returning count */
        return ret;
    }

    static const struct file_operations cma_test_fops = {
        .owner = THIS_MODULE,
        .read  = cma_test_read,
        .write = cma_test_write,
    };

    static struct miscdevice cma_test_misc = {
        .name = "cma_test",
        .fops = &cma_test_fops,
    };

    static int __init cma_test_init(void)
    {
        int ret = 0;

        ret = misc_register(&cma_test_misc);
        if (unlikely(ret)) {
            pr_err("failed to register cma test misc device!\n");
            return ret;
        }
        cma_dev = cma_test_misc.this_device;
        cma_dev->coherent_dma_mask = ~0;

        dev_info(cma_dev, "registered.\n");

        return ret;
    }
    module_init(cma_test_init);

    static void __exit cma_test_exit(void)
    {
        misc_deregister(&cma_test_misc);
    }
    module_exit(cma_test_exit);

    MODULE_LICENSE("GPL");
    MODULE_AUTHOR("Barry Song <>");
    MODULE_DESCRIPTION("kernel module to help the test of CMA");
    MODULE_ALIAS("CMA test");

Apply for memory:

    # echo 0 > /dev/cma_test

Free memory:

    # cat /dev/cma_test
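As a footnote to the migratetype discussion earlier in the article, the conversion from GFP_ flags to a migratetype can be sketched in userspace C. This is only an illustration under stated assumptions: the flag values and enum numbering below are modelled on kernels from around the time the article was written and may not match any given tree, and the toy_ names are hypothetical. The second helper reflects the design idea that only movable allocations are allowed to borrow pages from the MIGRATE_CMA free lists, so that they can be migrated away again when a device needs the area.

```c
#include <assert.h>

/* Assumed, illustrative flag bits; check your own kernel's gfp.h. */
#define TOY_GFP_MOVABLE     0x08u
#define TOY_GFP_RECLAIMABLE 0x80000u

/* Assumed numbering: the two flag bits map directly onto these values. */
enum toy_migratetype {
    TOY_MIGRATE_UNMOVABLE   = 0,
    TOY_MIGRATE_RECLAIMABLE = 1,
    TOY_MIGRATE_MOVABLE     = 2,
};

/* Fold the two mobility bits of the flags into a migratetype. */
static enum toy_migratetype toy_flags_to_migratetype(unsigned int gfp)
{
    unsigned int both = TOY_GFP_MOVABLE | TOY_GFP_RECLAIMABLE;

    /* Asking for both mobility classes at once makes no sense. */
    assert((gfp & both) != both);

    return (enum toy_migratetype)
        ((((gfp & TOY_GFP_MOVABLE) != 0) << 1) |
         ((gfp & TOY_GFP_RECLAIMABLE) != 0));
}

/* Only movable requests may be satisfied from CMA pageblocks. */
static int toy_may_use_cma(enum toy_migratetype mt)
{
    return mt == TOY_MIGRATE_MOVABLE;
}
```

A request without either mobility bit comes out as unmovable in this sketch and therefore never lands in the CMA area.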